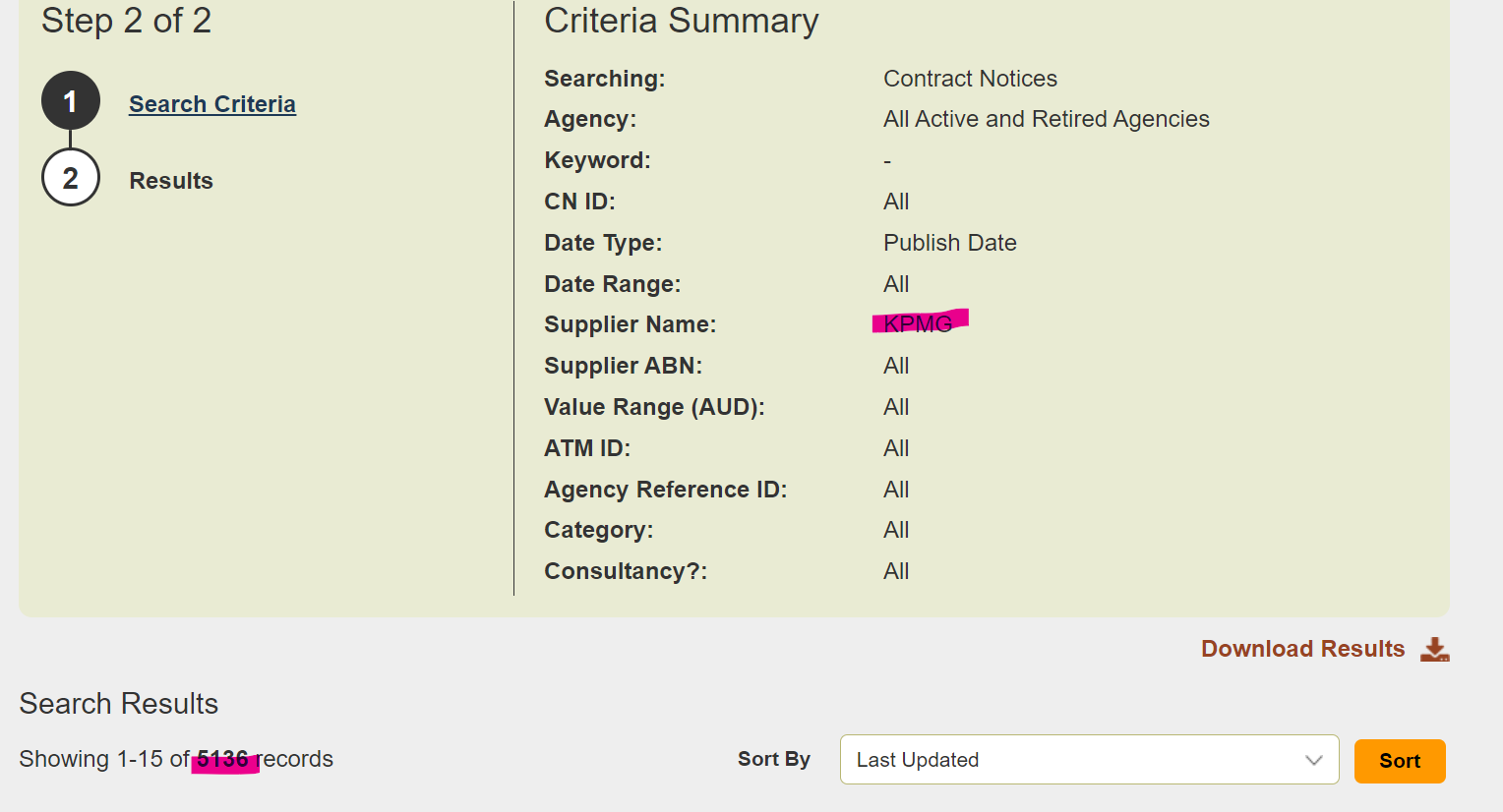

The Seven Year Itch (For Hardware)

Often I buy more hardware than I need. Actually, strike that – I always buy more hardware than I need. It’s a disease, really. This particular affliction manifested in 2018 when I pre-ordered a Turing Pi 1 because I had convinced myself that building a Raspberry Pi cluster would be the perfect way to learn Kubernetes.

It was not the perfect way.

Little did I realize that it would take me seven years to gather all seven Raspberry Pi Compute Module 3+ boards and finally bootstrap a k3s cluster. In that time:

Kubernetes went through approximately 47 major versions

The Raspberry Pi 4 and 5 came out (and experienced their own chip shortages)

I discovered my Turing Pi board had a faulty ethernet switch

I aged visibly; just look at my GitHub profile and the videos from my recent conference presentations.

The Recipe for Homelab Kubernetes Suffering

In this write-up, I’ll outline what it actually takes to set up a Raspberry Pi 3+ cluster in 2025. Consider this a cautionary tale wrapped in a tutorial. I’ll probably resell this now-functioning cluster to another masochist – er, enthusiast – and use the recouped capital to buy something newer that will sit on my shelf for another seven years.

Step 1: Acquire the Base Board

The Turing Pi 1 was a great option back in the day. It’s a mini-ITX form factor board that accepts up to 7 Raspberry Pi Compute Modules in SODIMM slots. The on-board gigabit ethernet switch was supposed to be the killer feature – no external networking required!

Pro tip: Make sure the on-board switch actually works. Test it before you commit to this path. Mine didn’t, which I discovered approximately 6 years too late.

Step 2: Collect Your Compute Modules (Like Pokemon, But Expensive)

You’ll need Raspberry Pi Compute Module 3+ boards. The Turing Pi 1 can handle up to 7 of them. I sourced mine from Mouser Electronics, though availability has been… variable… over the years.

I really wish there were more alternatives in the SODIMM compute module format. If you’re in the business of making one with a newer processor and more RAM, let’s talk. Seriously. My DMs are open.

Step 3: Flash the OS (The Easy Part, They Said)

The Compute Modules have onboard eMMC storage, which is the preferred boot device. Trying to use SD cards will lead to disappointment, inconsistent boots, and existential questioning of your life choices.

Here’s the gear you’ll need:

A Compute Module IO Board - Something like the Waveshare CM3/CM3+ IO Board or the official Raspberry Pi IO Board to put the module in USB mass storage mode

rpiboot/usbboot - The tool that makes the eMMC appear as a USB drive

Raspberry Pi Imager - The official tool for flashing OS images
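Before pointing the imager at anything, it helps to know exactly which disk is the module's eMMC. Here is a minimal sketch, assuming a Linux workstation with `lsblk` available and `rpiboot` built from the usbboot repository. The function name `new_block_devices` and the temp file paths are made up for illustration.

```shell
#!/bin/sh
# Print block devices that appear in the "after" list but not in the
# "before" list. Both arguments are files with one device name per line.
new_block_devices() {
    before_sorted=$(mktemp)
    after_sorted=$(mktemp)
    sort "$1" > "$before_sorted"
    sort "$2" > "$after_sorted"
    # comm -13 keeps only the lines unique to the second file
    comm -13 "$before_sorted" "$after_sorted"
    rm -f "$before_sorted" "$after_sorted"
}

# Intended use (needs the IO board attached, so left commented out):
#   lsblk -dno NAME > /tmp/before.txt
#   sudo rpiboot            # puts the module into USB mass storage mode
#   sleep 5                 # give the kernel a moment to register the disk
#   lsblk -dno NAME > /tmp/after.txt
#   new_block_devices /tmp/before.txt /tmp/after.txt
```

Whatever name this prints is the disk to select in the imager. If it prints nothing, the module probably never entered mass storage mode.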

Critical step: Bake in your SSH public key during the imaging process. This will save you from having to find 7 spare HDMI cables and keyboards. The Pi Imager has a settings gear icon that lets you configure hostname, SSH keys, and WiFi – use it.

# Generate an SSH key if you don't have one

ssh-keygen -t ed25519 -C "kubernetes-cluster"

Step 4: Network Configuration (Here Be Dragons)

Plug in all the modules and fire up the on-board Turing Pi ethernet. If you’re lucky, the on-board network works and you can access all the nodes. Marvel at how easy this was.
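A quick way to find out whether you are in the lucky group is to try an SSH no-op against every node. This is only a sketch: the `k8s` user matches the install commands used later in this post, but the addresses beyond `.103` are assumed to continue the sequence, and `check_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Assumed addresses: this post only shows .101 to .103; the other
# four are guessed to follow in order. Adjust to your own network.
NODES="192.168.1.101 192.168.1.102 192.168.1.103 192.168.1.104 192.168.1.105 192.168.1.106 192.168.1.107"
SSH_USER="k8s"

# Run a no-op on each node and report which ones answer.
# Returns non-zero if at least one node is unreachable.
check_nodes() {
    failed=0
    for ip in $NODES; do
        # BatchMode disables password prompts, so a missing key fails fast
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$SSH_USER@$ip" true </dev/null 2>/dev/null; then
            echo "ok   $ip"
        else
            echo "FAIL $ip"
            failed=1
        fi
    done
    return "$failed"
}

# Usage: check_nodes || echo "time to go shopping for USB ethernet adapters"
```

Seven lines of `FAIL` at once point at the switch rather than at any single module.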

If you’re me, you’ll discover the switch is dead and enter the five stages of homelab grief:

Denial: “It’s probably just a loose connection”

Anger: Unprintable, plus emails to Turing Pi support, only to learn that the board is End-of-Life and unsupported.

Bargaining: “Maybe I only need 4 nodes anyway”

Depression: stares at pile of unused compute modules

Acceptance: “I guess I’m buying USB ethernet adapters”

The Workaround: Get a bunch of USB-to-Ethernet adapters like the TP-Link UE300 and wire them into an external switch.

Unfortunately, only 4 of the compute modules have their USB ports exposed on the Turing Pi 1. For the other 3, you’ll need to do some creative soldering to expose the USB D+/D- and power lines. That’s just 12 more flying wires on the board. What could go wrong?

Step 5: The Case Mod (Optional But Satisfying)

I got a nice acrylic case to put it all in. It has a fan connection on top for cooling, which you’ll need because seven Pis generate a surprising amount of heat.

There were no extra slots for the 3 additional USB connections I needed. But I have a Dremel, two weeks of Christmas holidays, and absolutely no fear of voiding warranties.

Step 6: Actually Installing Kubernetes (The Easy Part, For Real This Time)

With SSH keys baked in, installing k3s is delightfully straightforward using k3sup (pronounced “ketchup”, because of course it is).

# Install k3sup

curl -sLS https://get.k3sup.dev | sh

sudo install k3sup /usr/local/bin/

# Bootstrap the first node as the server

k3sup install --ip 192.168.1.101 --user k8s

# Join additional nodes as agents

k3sup join --ip 192.168.1.102 --server-ip 192.168.1.101 --user k8s

k3sup join --ip 192.168.1.103 --server-ip 192.168.1.101 --user k8s

# ... repeat for remaining nodes

k3sup SSHes into each machine, downloads the necessary bits, and bootstraps a low-resource-friendly cluster. By default the datastore is SQLite; passing --cluster to k3sup install switches the server to embedded etcd. It’s genuinely magical compared to kubeadm.
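The "repeat for remaining nodes" part can be written as a loop instead of copy and paste. The sketch below only prints the commands so they can be reviewed first. It assumes the agents sit at `.102` through `.107`, which is a guess based on the three addresses shown above, and `print_join_commands` is a hypothetical helper name.

```shell
#!/bin/sh
SERVER_IP="192.168.1.101"
SSH_USER="k8s"
# Assumed: the six agents continue the .102, .103, ... sequence.
AGENT_LAST_OCTETS="102 103 104 105 106 107"

# Print one k3sup join command per agent without running anything.
print_join_commands() {
    for octet in $AGENT_LAST_OCTETS; do
        echo "k3sup join --ip 192.168.1.$octet --server-ip $SERVER_IP --user $SSH_USER"
    done
}

# Review the output, then run it for real:
#   print_join_commands > join.sh && sh join.sh
```

Printing before running is deliberate: a typo in an address is much cheaper to catch on screen than halfway through a join.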

Reality check: After the k3s install, a CM3+ doesn’t have much headroom left for actually running applications. We’re talking about 1 GB of RAM per node, shared between the OS, kubelet, and your workloads. It’s a great testbed for learning the Kubernetes API and running ARM binaries natively, but don’t expect to run your company’s microservices on it.
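To put a number on that headroom, you can read `MemAvailable` from a node's `/proc/meminfo`. This is a small sketch; `mem_available_mib` is a hypothetical helper, and it assumes a kernel recent enough to report `MemAvailable`, which any current Raspberry Pi OS should be.

```shell
#!/bin/sh
# Print MemAvailable in MiB from a meminfo-style file.
# Defaults to /proc/meminfo on the machine where it runs.
mem_available_mib() {
    # /proc/meminfo reports values in kB, so divide by 1024 for MiB
    awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Usage against a remote node (address is an example from this post):
#   ssh k8s@192.168.1.102 cat /proc/meminfo > /tmp/node.meminfo
#   mem_available_mib /tmp/node.meminfo
```

Comparing the figure before and after the k3s install shows how much of that 1 GB the cluster plumbing itself takes.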

The Final Result

After seven years of procrastination, hardware hunting, debugging dead ethernet switches, creative soldering, and Dremel work, I finally have a working 7-node Kubernetes cluster.
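If you want proof rather than my word for it, count the nodes that report `Ready`. The sketch below assumes `kubectl` is installed on your workstation and that the `kubeconfig` file k3sup writes to the working directory by default is still there. `count_ready_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` on stdin.
count_ready_nodes() {
    # n + 0 forces a numeric 0 when no line matched
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage:
#   KUBECONFIG=./kubeconfig kubectl get nodes --no-headers | count_ready_nodes
```

Note that the exact match skips cordoned nodes (status `Ready,SchedulingDisabled`), so a result below seven is not always bad news.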

It also serves as a rather festive Christmas decoration with the green PCB and red blinking LEDs. Very on-brand for the holidays.

What’s Next?

Hopefully I’ve been a good boy this year and Santa will bring me some newer clustering hardware to play with. The Turing Pi 2.5 looks tempting with its support for CM4, Jetson, and the Turing RK1 modules.

But knowing me, I’ll buy it in 2025 and finally get it working by 2032.

Hardware Shopping List

For anyone brave enough to follow this path, here’s what you’ll need:

| Item | Link | Notes |
|---|---|---|
| Turing Pi 1 Board | Turing Pi | Check if ethernet works! |
| Raspberry Pi CM3+ (x7) | Mouser | 8GB/16GB/32GB eMMC options |
| CM IO Board for flashing | Waveshare | Or official RPi IO Board |
| USB Ethernet Adapters | Amazon | Just in case |
| Ethernet Switch | Your choice | 8+ ports recommended |
| Acrylic Case | Various | With fan for cooling |

Software & Tools

Raspberry Pi Imager - OS flashing tool

rpiboot/usbboot - For eMMC flashing

k3s - Lightweight Kubernetes distribution

k3sup - k3s installer via SSH

etcd - Distributed key-value store

Feel free to ping me with your own homelab Kubernetes horror stories. Misery loves company.