Yesterday we had the day-long Adelaide Radar Research Centre opening ceremony. The centre is a self-funded collaborative research centre directed by my PhD supervisor, Doug Gray. The talks ranged from highly theoretical, to commercial, to what amounted to a company ad (even though the speaker promised not to make it one).

I found the first talk the most interesting. This is probably because I was most attentive at the beginning of the day, and because it presented an undeniable commercial success of Radar research.

GroundProbe discussed their mine wall stability radar and how it evolved from a concept into highly user-friendly, truck-mountable versions.

The next talk was from Simon Haykin and dealt with the superbly abstract Cognitive Radar. He kept drawing parallels with the visual brain and talking about layers of memory and feedback. At the end of the day, one of my colleagues asked: if the Cognitive Radar is paralleled by the visual brain, then where is the transmitter? The auditory brain of a bat might have been a better analogy.

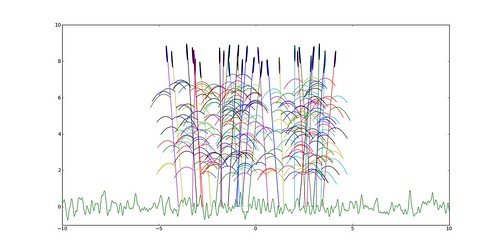

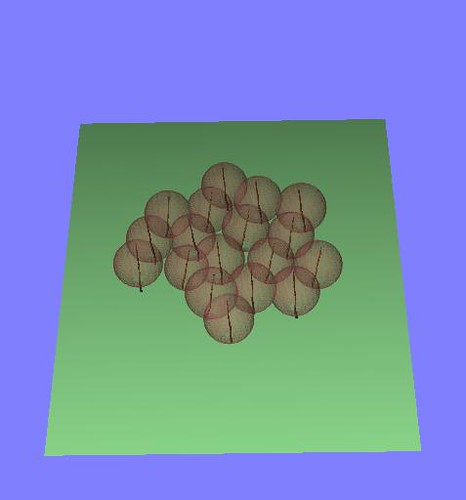

In the last talk before lunch, Marco Martorella discussed ISAR (inverse synthetic aperture radar). He showed how ISAR techniques can sharpen blurred SAR images of moving targets, such as moored ships rocking at anchor. However, the ISAR results seem to flatten the image onto a given projection plane, which raised the question of how to estimate 3D structure. There seems to be no accepted solution to this yet. Maybe some of the work done in computer vision can help here, particularly POSIT and friends.

The energy level dropped a bit after the lunch break. Several people from DSTO left to get back to work and others arrived, so the mix of the audience changed slightly. The afternoon talks came from Raytheon and the BoM, followed by one on the SuperDARN system.

The guy from Raytheon talked about the huge scope of their Radar systems. These range from the tiny targeting radars in aircraft to gigawatt beasts that require their own nuclear power plant. He also talked about their obsession with gallium nitride and heat dissipation.

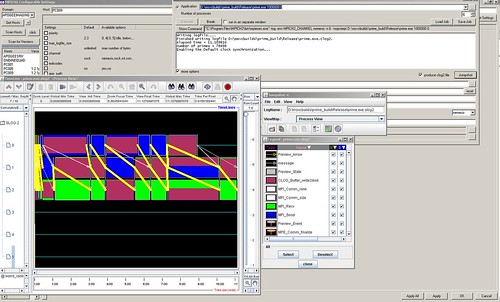

The BoM fellow talked about the weather radar network, which we have all come to know and love. He traced it from its origins in World War II to the networked and highly popular public system it is today. They seem to have a projective next-hour precipitation forecast along the lines I had imagined before. However, it is only distributed to air-traffic control and similar users.

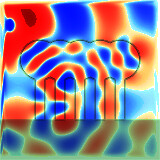

The last presentation about

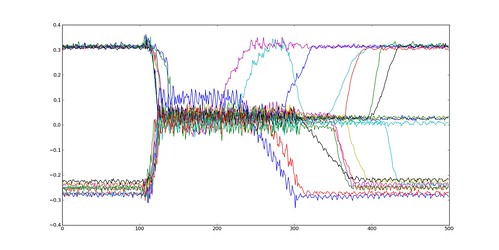

SuperDARN had the most animated sparkle I have seen in a Radar presentation. It featured

coronal discharges from the sun, interactions with the Earth's magnetic field (without which we would be pretty char grilled) and results of the fluctuations in the polar aurora.

Overall it was a pretty interesting and diverse day. At the end of it we were left discussing the vagaries of finishing a PhD and their similarity to Zeno's paradox.