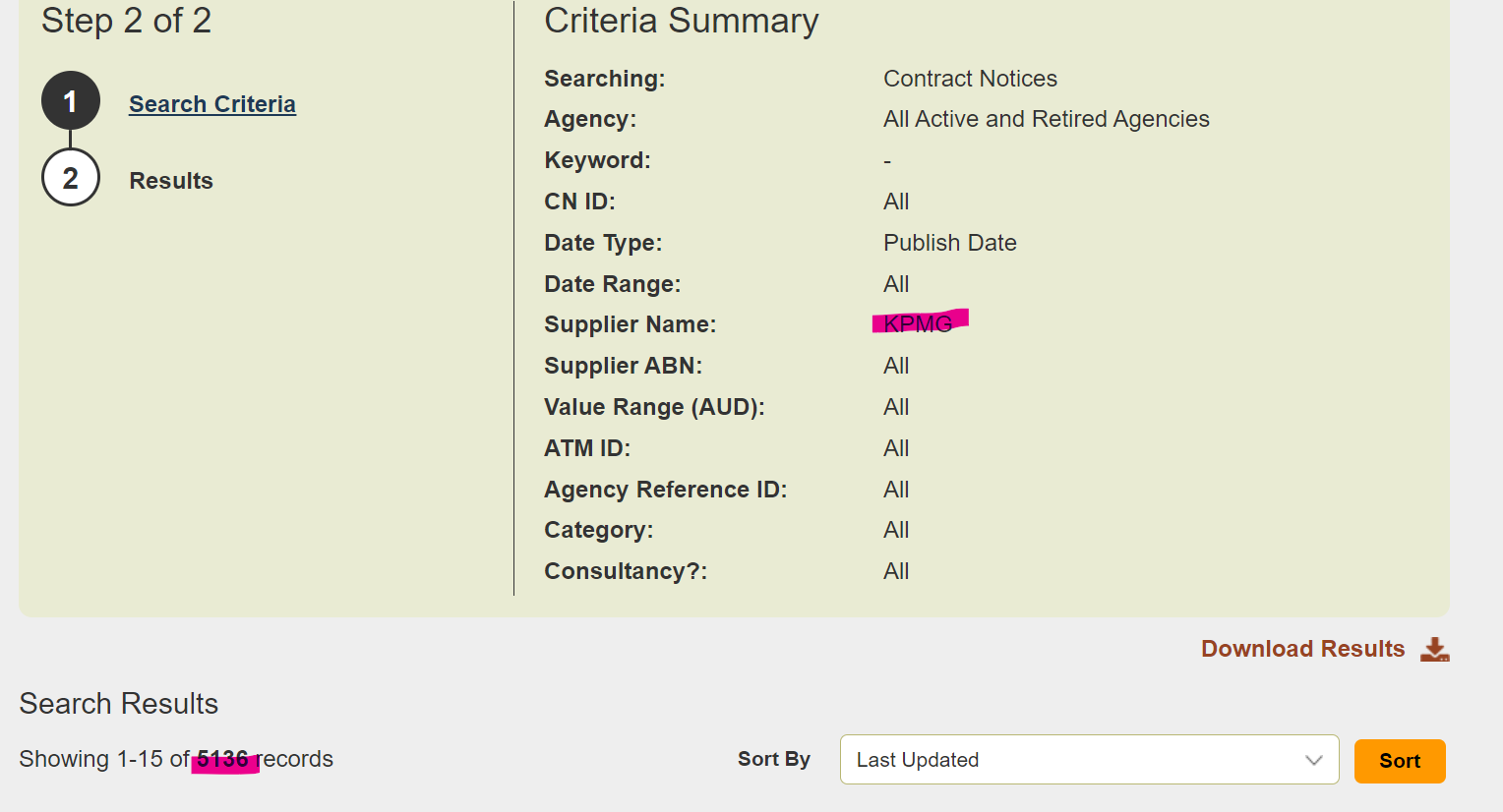

The AusTender analyser started life as a straight HTML scraper built with Colly, walking the procurement portal page by page. It worked, but it was always one redesign away from a slow death: layout shifts, odd pagination edges, and the constant need to throttle hard so I could sleep at night.

Then the Australian Government exposed an Open Contracting Data Standard (OCDS) API. That changed the whole game. Instead of scraping tables and div soup, I can treat the portal like a versioned data feed.

Part of why I care: I am kind of fascinated by government spending as a system. Budgets read like a mixture of engineering constraints and political storytelling, and I keep wanting to trace the thread from “budget line item” to “actual contract award” without hand-waving. The Treasurer’s Final Budget Outcome release for 2022-23 (“Final Budget Outcome shows first surplus in 15 years”) is exactly the sort of headline that makes me want to drill down into the mechanics.

So the redesign in austender_analyser does three things differently:

- Fetch via OCDS, not HTML: Reduce breakage by consuming the API’s canonical JSON, not scraped pages.

- Persist to Ducklake: Store releases, parties, and contracts in Ducklake so you can query locally without rerunning the whole pipeline. This does not quite work yet; I am treating it as a learning exercise with Ducklake. It is much easier to learn on a real problem than on toy demo datasets.

- Treat caching as optional: Counterintuitively, the local cache is sometimes slower than pulling fresh data. Ducklake’s startup and query overhead can outweigh a simple, parallelized upstream call. The new design keeps the cache but makes it opt-in and measurable.

If you prefer Python, the upstream API team ships a reference walkthrough in the austender-ocds-api repo (see also the SwaggerHub docs and an example endpoint like findById).

Why move off Colly?

- Scraping HTML is like doing accounting by screenshot. OCDS is the ledger export.

- Less breakage: OCDS is documented and versioned; DOM scraping is brittle.

- Faster iteration: You model on structured data immediately, not after a fragile extraction layer.

- Clear rate behavior: You can respect API limits without guessing at dynamic page loads.

Why keep Ducklake in the loop?

Ducklake is the reproducibility knob. It lets me freeze a snapshot, replay transforms, and run offline queries when I am iterating on analysis (or when the upstream is slow, or when I just do not want to be a bad citizen).

But caches are not free. Ducklake has startup and query overhead, and that can be slower than simply pulling fresh JSON in parallel. So the pipeline treats Ducklake like a tool, not a religion: measure the latency, pick the faster path, keep an escape hatch when you need repeatability.

Current flow

- Pull OCDS releases in batches, keyed by release date and procurement identifiers.

- Normalize the JSON into Ducklake tables (releases, awards, suppliers, items).

- Emit lightweight summaries for quick diffing between runs.

Lessons learned

- A stable API beats heroic HTML scraping almost every time, even in the age of AI and [Firecrawl](https://www.firecrawl.dev/).

- Caches are not free; measure them. Sometimes stressing the upstream lightly is faster and still acceptable within published rate limits.

- Keep exit hatches: allow forcing cache use, bypassing it, and snapshotting runs for reproducibility.
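The cache exit hatches map naturally onto a run-level mode flag. A sketch, assuming a hypothetical `-cache` flag with `auto`, `force` and `bypass` values; none of these names come from austender_analyser, and snapshotting is left out.

```go
package main

import (
	"flag"
	"fmt"
	"strings"
)

// CacheMode is a hypothetical run-level switch for the local cache.
type CacheMode int

const (
	CacheAuto   CacheMode = iota // use whichever path measured faster
	CacheForce                   // always read the local snapshot (repeatable runs)
	CacheBypass                  // always pull fresh data from the API
)

// ParseCacheMode maps a flag value to a CacheMode, case-insensitively.
func ParseCacheMode(s string) (CacheMode, error) {
	switch strings.ToLower(s) {
	case "", "auto":
		return CacheAuto, nil
	case "force":
		return CacheForce, nil
	case "bypass":
		return CacheBypass, nil
	}
	return CacheAuto, fmt.Errorf("unknown cache mode %q (want auto, force or bypass)", s)
}

// UseCache decides whether this run reads from the cache; only auto mode
// consults the measured comparison.
func UseCache(mode CacheMode, cacheMeasuredFaster bool) bool {
	switch mode {
	case CacheForce:
		return true
	case CacheBypass:
		return false
	default:
		return cacheMeasuredFaster
	}
}

func main() {
	modeFlag := flag.String("cache", "auto", "cache mode: auto, force or bypass")
	flag.Parse()
	mode, err := ParseCacheMode(*modeFlag)
	if err != nil {
		fmt.Println(err)
		return
	}
	// false stands in for a real latency measurement.
	fmt.Println("read from cache:", UseCache(mode, false))
}
```

With this shape, `go run . -cache=force` would pin a run to the local data for reproducibility, while `-cache=bypass` always hits the upstream.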

Next steps: Going deeper: tighten validation against the OCDS schema, add minimal observability (latency histograms for API vs cache), and ship a “fast path” mode that only hydrates the fields needed for high-level spend dashboards. Going broader: find sites and build API and web aggregators for Australian state tender sites (e.g. VicTender) and for international ones.
