I have been spending time building out the V93XX_Arduino library for Vangotech energy-monitoring ASICs, and this is one of those projects where tooling makes or breaks momentum.

The pairing that worked best for me was:

- GitHub Copilot for repetitive protocol code, tests, and workflow glue

- arduino-cli for deterministic compile and upload loops

- Saleae Logic Analyzer captures to confirm what is actually on the wire

The short version: Copilot gets you to a plausible driver quickly, but the logic analyzer gets you to a correct driver.

Why this trio works

Developing protocol drivers is usually a game of “spec says one thing, silicon does another thing”.

If you rely only on serial logs, you can miss timing and framing issues. If you rely only on captures, you can waste hours writing boilerplate and one-off scripts. Using these three together gives a tighter loop:

- Copilot proposes code and tests from your intent

- Arduino CLI compiles and flashes quickly from scripts

- Saleae confirms framing, parity, baud behavior, and CRC bytes

- Copilot helps refactor after you learn what the bus is doing

In practice, this turned the V9381 UART ChecksumMode and waveform/FFT work from a stop-start activity into a repeatable pipeline.

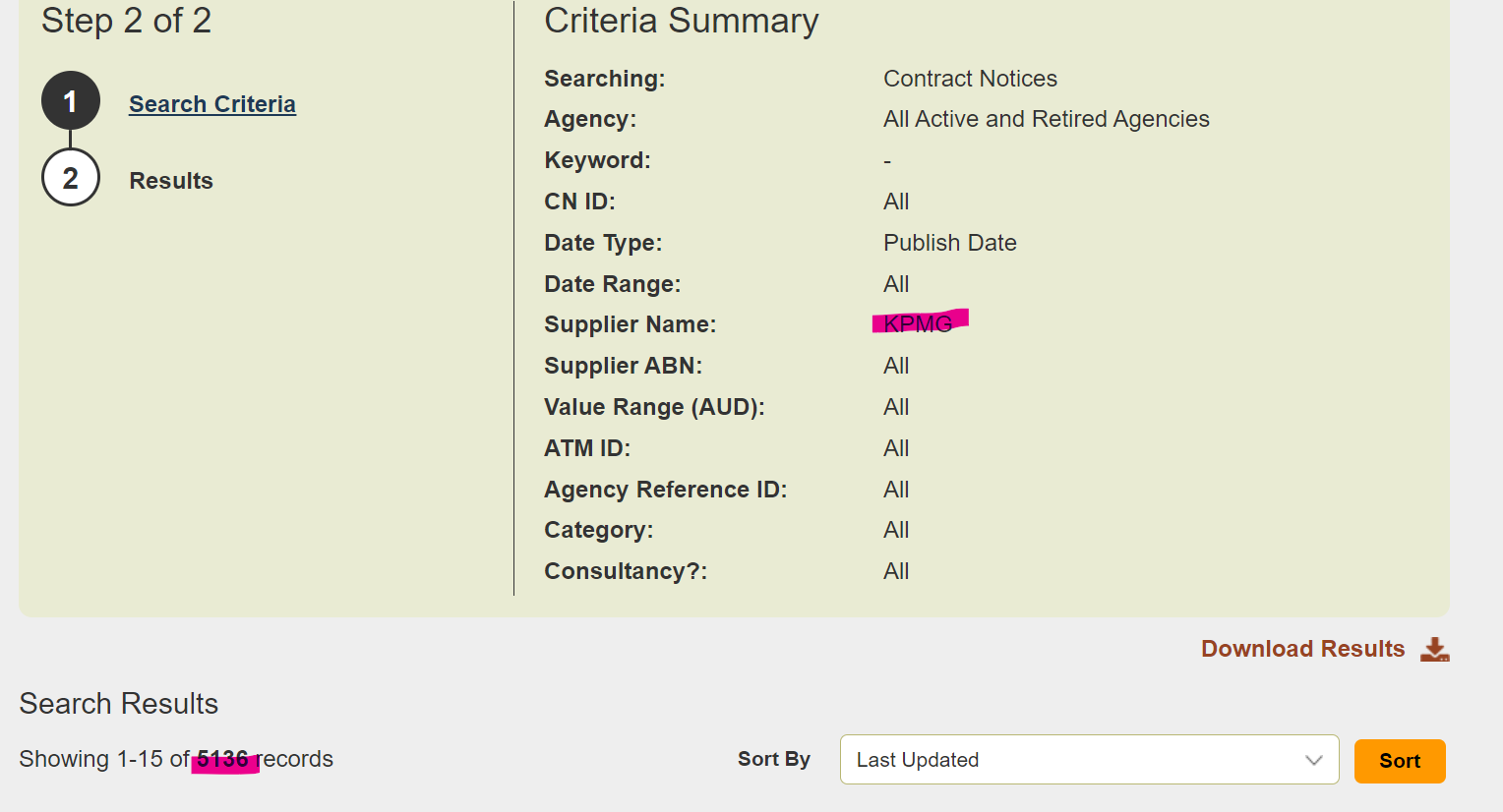

Project context: V93XX_Arduino

Repository: whatnick/V93XX_Arduino

The library currently covers:

- V93XX_UART for V9360 and V9381 UART modes (including address pins and checksum modes)

- V93XX_SPI for V9381 SPI paths (faster acquisition, up to MHz clocks)

- Examples for baseline comms, waveform capture, and FFT

Useful docs in the repo:

Practical workflow

1) Start with a machine-checkable baseline

Before touching hardware, run the local CRC and framing tests.

python tools/test_checksum_mode.py

If this is red, do not flash anything yet.
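To give a feel for what a machine-checkable baseline can look like, here is a minimal sketch of a bitwise CRC-8 with a known-answer check. It is not the contents of tools/test_checksum_mode.py. The polynomial (0x07) and initial value (0x00) are the common CRC-8 defaults and are used here only as placeholders; treat them as an assumption and take the real parameters from the datasheet.

```python
def crc8(data: bytes, poly: int = 0x07, init: int = 0x00) -> int:
    """Bitwise CRC-8, MSB first, no reflection, no final XOR.

    poly and init are placeholder defaults, not confirmed V9381 values.
    """
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def check_known_answer() -> bool:
    # "123456789" is the conventional CRC check string; 0xF4 is the
    # published check value for poly 0x07 with init 0x00.
    return crc8(b"123456789") == 0xF4


def check_residue(message: bytes) -> bool:
    # With no final XOR, appending the CRC to the message gives a CRC of 0.
    return crc8(message + bytes([crc8(message)])) == 0
```

Known-answer and residue checks like these run without any hardware attached, which is the point of doing them first.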

2) Use Copilot for structured implementation work

I get better results when prompts are specific and constrained. Example prompt:

Implement V9381 UART read path with explicit ChecksumMode behavior.

Keep Clean mode strict and Dirty mode permissive.

Add unit tests for CRC-8 edge cases and frame parsing.

Do not change public method names.

Copilot is strongest here at:

- Building test scaffolding quickly

- Keeping repetitive register access code consistent

- Producing incremental refactors after captures reveal protocol edge cases
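The "Clean strict, Dirty permissive" split from the prompt above can be sketched in a few lines. This is a host-side Python illustration, not the library's C++ API: the names `parse_frame`, `ParsedFrame`, and `placeholder_checksum` are hypothetical, the checksum is assumed to be the last byte of the frame, and the checksum function is a stand-in rather than the chip's documented algorithm.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class ChecksumMode(Enum):
    CLEAN = "clean"  # strict: reject frames whose checksum does not match
    DIRTY = "dirty"  # permissive: keep the payload but record the mismatch


class ChecksumError(ValueError):
    """Raised in CLEAN mode when the received checksum is wrong."""


@dataclass
class ParsedFrame:
    payload: bytes
    checksum_ok: bool


def placeholder_checksum(payload: bytes) -> int:
    # Stand-in only: one's complement of the byte sum, truncated to 8 bits.
    return (~sum(payload)) & 0xFF


def parse_frame(frame: bytes, mode: ChecksumMode,
                checksum: Callable[[bytes], int]) -> ParsedFrame:
    # Assumption: the final byte of the frame carries the checksum.
    if len(frame) < 2:
        raise ValueError("frame too short to hold payload and checksum")
    payload, received = frame[:-1], frame[-1]
    ok = checksum(payload) == received
    if not ok and mode is ChecksumMode.CLEAN:
        raise ChecksumError(f"expected {checksum(payload):#04x}, got {received:#04x}")
    return ParsedFrame(payload=payload, checksum_ok=ok)
```

Passing the checksum function in as a parameter keeps the mode logic testable on its own, so the real algorithm can be swapped in once captures confirm it.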

3) Consume datasheets with PDF MCP and keep them in-repo

Before generating more driver code, I now ingest the vendor datasheet with pdf-reader-mcp so Copilot can work from extracted register tables and command framing notes.

My workflow is:

- Commit the original datasheet PDF to the repository, for example under docs/datasheets/v93xx/.

- Use PDF MCP to extract the high-value sections (register map, UART/SPI timing, checksum/CRC rules, waveform buffer details).

- Save extracted artifacts back into the repo as text or markdown notes, for example docs/datasheets/v93xx/register_notes.md.

- Prompt Copilot using both code and extracted notes so generated changes are tied to concrete datasheet language.

This gives you a versioned paper trail from silicon docs to driver behavior, which is very useful when reconciling captures from the logic analyzer.
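One way to make those notes do more than sit in the repo is to parse them in a test. The sketch below assumes the extracted notes contain a markdown table whose first two columns are a hex address and a register name; that layout, the function name `parse_register_table`, and the register names in the usage example are all hypothetical.

```python
import re
from typing import Dict

# Matches rows such as "| 0x1F | SOME_NAME | free text |".
ROW = re.compile(r"^\|\s*(0x[0-9A-Fa-f]+)\s*\|\s*([A-Za-z_][A-Za-z0-9_]*)\s*\|")


def parse_register_table(markdown: str) -> Dict[str, int]:
    """Map register name to address for every matching table row.

    Header and separator rows do not start with a hex address, so they
    are skipped automatically.
    """
    registers: Dict[str, int] = {}
    for line in markdown.splitlines():
        match = ROW.match(line.strip())
        if match:
            address, name = match.groups()
            registers[name] = int(address, 16)
    return registers


# Usage with made-up rows (not real V93XX registers):
EXAMPLE_NOTES = """
| Address | Name | Description |
|---------|------|-------------|
| 0x00 | EXAMPLE_REG_A | made-up row |
| 0x1F | EXAMPLE_REG_B | made-up row |
"""
EXAMPLE_REGISTERS = parse_register_table(EXAMPLE_NOTES)
```

A unit test could then compare the driver's address constants against the parsed table, so a typo in either place shows up before anything is flashed.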

4) Compile and flash with Arduino CLI

For ESP32-S3 targets, this keeps the build deterministic and scriptable:

arduino-cli board list

arduino-cli compile --fqbn esp32:esp32:esp32s3 examples/V9381_UART_DIRTY_MODE

arduino-cli upload --fqbn esp32:esp32:esp32s3 --port COM3 examples/V9381_UART_DIRTY_MODE

PowerShell users can run the project helper script:

.\tools\run_automated_tests.ps1 -Port COM3

That pipeline can run unit tests, compile, upload, serial verification, and optional capture/analysis phases.
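For anyone not on PowerShell, the compile and upload steps are easy to wrap yourself. This is a minimal sketch, not a port of run_automated_tests.ps1: the function names are hypothetical, and it only covers the two arduino-cli commands shown above.

```python
import subprocess
from typing import Callable, List

FQBN = "esp32:esp32:esp32s3"


def compile_cmd(sketch: str, fqbn: str = FQBN) -> List[str]:
    return ["arduino-cli", "compile", "--fqbn", fqbn, sketch]


def upload_cmd(sketch: str, port: str, fqbn: str = FQBN) -> List[str]:
    return ["arduino-cli", "upload", "--fqbn", fqbn, "--port", port, sketch]


def build_and_flash(sketch: str, port: str,
                    run: Callable = subprocess.run) -> None:
    # check=True raises on a non-zero exit code, so a failed compile
    # stops the loop before the upload is attempted.
    for cmd in (compile_cmd(sketch), upload_cmd(sketch, port)):
        run(cmd, check=True)
```

Calling `build_and_flash("examples/V9381_UART_DIRTY_MODE", "COM3")` reproduces the two commands above. The `run` parameter exists so the command construction can be tested without a board attached.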

5) Validate on-wire behavior with Saleae

The logic analyzer phase is where protocol assumptions get tested.

For UART work:

- Confirm bus settings (baud, parity, stop bits) match the target mode

- Verify frame boundaries and inter-frame timing

- Check CRC byte placement and value against expected payload sum/complement logic
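The frame-boundary check in particular is simple to automate once the decoded bytes are exported with timestamps. The sketch below assumes a list of (start_time_in_seconds, byte_value) pairs and splits it wherever the line sits idle for more than a couple of character times. The function names, the parity default, and the two-character gap threshold are illustrative assumptions; the real values should come from the target mode's settings.

```python
from typing import List, Optional, Sequence, Tuple


def byte_time_s(baud: int, data_bits: int = 8, parity: bool = True,
                stop_bits: int = 1) -> float:
    """Duration of one UART character: start + data + parity + stop bits."""
    bits = 1 + data_bits + (1 if parity else 0) + stop_bits
    return bits / baud


def split_frames(samples: Sequence[Tuple[float, int]], baud: int,
                 parity: bool = True, gap_chars: float = 2.0) -> List[bytes]:
    """Group (start_time_s, byte) samples into frames by idle gap.

    A new frame starts when the idle time between the end of one
    character and the start of the next exceeds gap_chars character times.
    """
    char_t = byte_time_s(baud, parity=parity)
    frames: List[bytearray] = []
    prev_start: Optional[float] = None
    for start, value in samples:
        idle = None if prev_start is None else start - prev_start - char_t
        if idle is None or idle > gap_chars * char_t:
            frames.append(bytearray())
        frames[-1].append(value)
        prev_start = start
    return [bytes(frame) for frame in frames]
```

With frames split this way, each one can be checked for length and checksum placement, and the gaps between them compared against the inter-frame timing the datasheet specifies.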

If using the helper scripts in this repo:

python tools/capture_v9381_uart.py

python tools/analyze_checksum_captures.py [capture_dir]

This gives a concrete report of expected vs observed CRC and whether behavior matches Clean vs Dirty semantics.

What changed in my debugging habits

Old approach

- Edit sketch

- Upload

- Stare at serial output

- Guess

New approach

- Ask Copilot for a narrow, test-backed change

- Build and flash with Arduino CLI from a script

- Capture with Saleae and compare against expected frame math

- Feed mismatch details back into Copilot for targeted fixes

It feels closer to hardware TDD than trial-and-error firmware hacking.

Trade-offs and cost notes

- Arduino CLI is free and excellent for repeatable CI-style local loops

- Copilot is a paid productivity tool, but pays for itself when protocol code churn is high

- Saleae hardware is not cheap, but it can save days when parity/timing/CRC bugs are subtle

If you are doing serious serial or SPI driver work, a logic analyzer is not optional for long.

Troubleshooting notes that keep coming up

- Wrong serial configuration (especially parity) can look like random CRC failure.

- Upload success does not imply runtime protocol correctness.

- Dirty mode can hide issues by design; keep a Clean mode path for strict validation.

- Keep datasheet extracts in-repo so Copilot prompts reference real tables, not memory.

- A scripted workflow (pdf-reader-mcp + arduino-cli + capture + analysis) beats ad-hoc manual steps every time.

Closing

For this V93XX work, the winning combination was AI-assisted coding plus old-school instrumentation.

Copilot helps me move faster, Arduino CLI keeps the loop reproducible, and Saleae captures keep me honest.

If you are building protocol drivers, treat those as complementary tools, not alternatives.