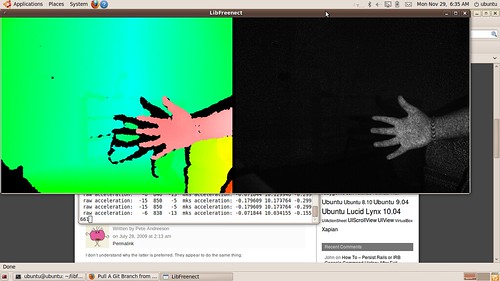

The IR sensor on the Kinect can now pull images illuminated by the laser. The camera needs to be initialized with a separate command to switch into IR mode, and the search is still on for streams with a higher resolution than 640x480. I have made some headway towards a v4l2 wrapper for the Kinect streams using AVLD. This involves capturing data using libfreenect and writing it to the pseudo-device created by AVLD.

This week I am also at the CSIRO Cop meeting at ANU in Canberra. There will be 1.5 days of chats and some informal follow-ons. This is mostly a programming and data management community with a pile of Matlab and Unix scripters, web-service developers and database admins. The first talk was about eResearch and the corporate infrastructure to support it. The second talk was from the legal section, covering open source and open data, with terms like encumbrances, freedom to operate (FTO) and due diligence. This talk covered licences and caused a fair bit of discussion.

The third talk was about OffSiders, which feels like a new object-based scripting language. It will require some experimenting to work out what it is good for. The persistence is non-atomic and exists as a transaction as supported by the filesystem. It has a pure computer science glow and will definitely trigger some "what is it like?" buttons.

Monday, November 29, 2010

Saturday, November 27, 2010

Joys of cleaning the house - Nuking non-compliant code

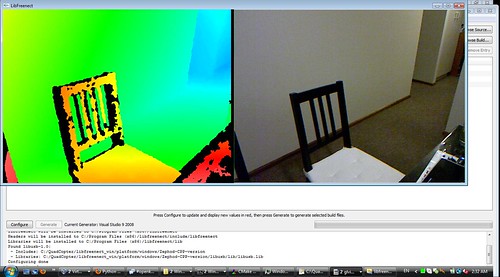

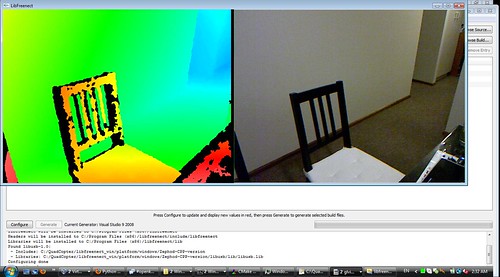

The OpenKinect project is nascent and as such the win32 platform support is still stabilizing. Due to the naughty behaviour of libusb on Windows, several attempts have been made so far to create a common API across platforms, without a simple, maintainable and working version emerging.

I decided to learn about the Kinect, coding on GitHub and merging things in general by taking Zephod's code and putting it in a win32 folder under the library source. I got most of the demo code compiling and running with this approach. I took a masterpiece of my Ikea chair in depth on Vista 64, apparently a rather difficult OS to get it working on. By then my git code tree had become cluttered with lots of merges, as I was working off 3 remotes (my own fork, Zephod and mainline). With some nudging from this video, I deleted all copies of my work into oblivion. I can't control Google cache and such, but it felt liberating.


Tuesday, November 23, 2010

Python Bindings for Accidental API - and vivi.c

I started a couple of Kinect-related projects. Firstly, writing a Linux kernel driver based on v4l2, cloning vivi.c to act as a stub. The responses indicate that a gspca-based approach might be better, but I had a lot of fun hand-hacking the vivi.c code and adding 2 video devices from 1 driver. I will have to wait till someone puts some gspca stub code out.

Secondly, I completed a first working version (as in, it can get some image buffers) of the win32 Python binding from Zephod's code. I followed the SWIG how-tos and had to resort to typemapping to extract the image and depth buffers, but it all worked out in the end. Not quite as well as I had expected on the 1st attempt, but I can now replicate some of the early work on Linux in Windows. The colours in the RGB image are flipped, so I will have to change the data order. A lot of frames are dropped, so the response is not as smooth as it could be.
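Changing the data order for flipped colours can be sketched in plain Python. This is only an illustration, assuming the image buffer arrives as packed bytes with 3 bytes per pixel; the function name `swap_red_blue` is hypothetical and not part of the SWIG binding.

```python
def swap_red_blue(frame: bytes) -> bytes:
    """Return a copy of a packed 3-bytes-per-pixel frame with channels 0 and 2 swapped.

    Assumption: the buffer is tightly packed (no row padding), e.g. RGBRGB... or BGRBGR...
    """
    if len(frame) % 3 != 0:
        raise ValueError("frame length must be a multiple of 3 bytes")
    out = bytearray(frame)
    # Extended slices select every third byte: the first and last channel of each pixel.
    out[0::3] = frame[2::3]
    out[2::3] = frame[0::3]
    return bytes(out)


# Two pixels in RGB order: pure red, then pure blue.
pixels = bytes([255, 0, 0, 0, 0, 255])
swapped = swap_red_blue(pixels)  # the same two pixels in BGR order
```

Applying the swap twice returns the original buffer, which makes for an easy sanity check on real frames.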


Sunday, November 21, 2010

Hooking Kinect into Languages - Python and Matlab

I spent the morning wading through SWIG to hook up Zephod's win32 Kinect code into Python. After getting the interface file and CMake in place, I got an unsupported platform type error from the MSVC compiler.

I will also need to get some Matlab integration via Mex files in place to get classic academic usage and student projects rolling. With my budget and time constraints I can offer a small bounty to get this done. Contact me if interested.

Also on the todo list are v4l2 integration and a gstreamer plugin. The principle of operation of these drivers is simple: allocate a buffer, fill it up with data from the Kinect and push it up the chain for further processing, instead of just displaying it as the current code does.
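The allocate, fill and push pattern can be sketched without any Kinect hardware. This is a toy model in plain Python, not v4l2 or gstreamer code; `run_pipeline`, `invert` and the fake frames are all hypothetical names made up for illustration.

```python
from typing import Callable, Iterable, List

Stage = Callable[[bytearray], bytearray]


def run_pipeline(source: Iterable[bytes], stages: List[Stage], frame_size: int) -> List[bytes]:
    """Copy each source frame into one reusable buffer and pass it through the stages."""
    buffer = bytearray(frame_size)        # allocate the buffer once
    results = []
    for frame in source:
        if len(frame) != frame_size:
            continue                      # drop frames that do not fit the buffer
        buffer[:] = frame                 # fill it with the incoming data
        data = buffer
        for stage in stages:              # push it up the processing chain
            data = stage(data)
        results.append(bytes(data))       # snapshot, since the buffer is reused
    return results


def invert(buf: bytearray) -> bytearray:
    """Example stage: invert every byte in place."""
    for i, value in enumerate(buf):
        buf[i] = 255 - value
    return buf


# Stand-in for frames coming off the device.
fake_frames = [bytes([0, 10, 20]), bytes([250, 255, 5])]
processed = run_pipeline(fake_frames, [invert], frame_size=3)
```

A real driver would hand the filled buffer to the kernel or to the next gstreamer element rather than collecting results in a list; the list here only makes the sketch easy to inspect.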

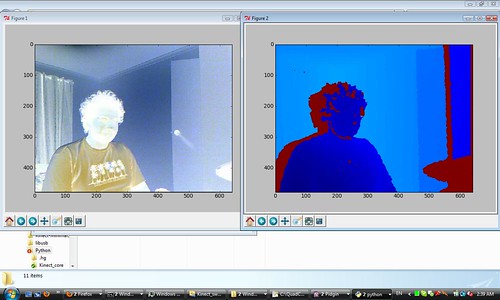

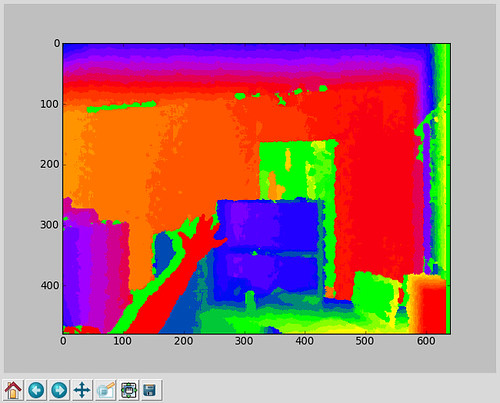

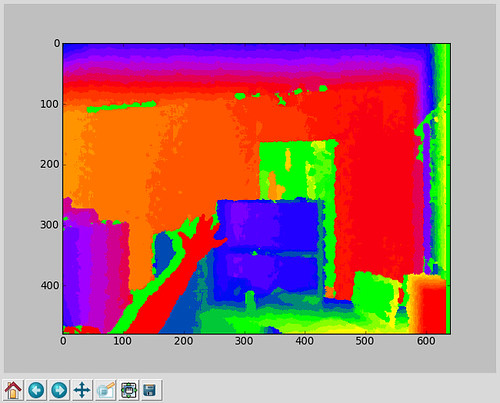

The new Python wrappers for libfreenect allow me the luxury of using matplotlib colourmaps to redisplay the depth as I see fit. It introduces lag, but who cares, we have depth in Python for $200.


Friday, November 19, 2010

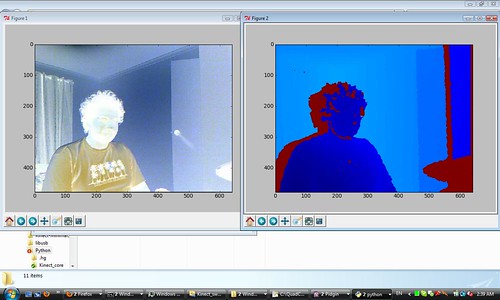

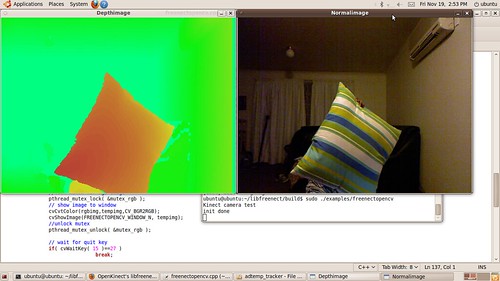

Getting Data from Kinect to OpenCV

A fair bit of work has already been done on simple template-based object identification with the Kinect, and even skeleton tracking. I finally got my hands on one today from JB Hi-Fi, and paid a few bucks extra for it. Harvey Norman finally got them in stock at the end of the day. Terrible logistics or favouritism to employees, or something in between. Anyway, I can now get a 2nd one from the pre-order. Apparently a single USB 2.0 bus is insufficient to handle the streams from 2 Kinects. I did some simple experiments with OpenCV, just adding some hue mapping and swapping the RGB channels. Tomorrow we are having the 12Hr Hackathon at Adelaide Hackerspace, so I might get to make a lantern controller then.
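Hue mapping of depth can be illustrated with nothing but the standard library. This sketch uses `colorsys` rather than OpenCV, and assumes raw depth values in the 11-bit range 0 to 2047; the function name `depth_to_rgb` and the choice of red for near and blue for far are hypothetical.

```python
import colorsys


def depth_to_rgb(depth, max_depth=2047):
    """Map a raw depth value to an (r, g, b) tuple by sweeping the hue.

    Assumption: depth is an integer in 0..max_depth; values outside are clamped.
    """
    clamped = min(max(depth, 0), max_depth)
    # Sweep two thirds of the hue circle: 0 is red (near), 2/3 is blue (far).
    hue = (clamped / max_depth) * (2.0 / 3.0)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


near = depth_to_rgb(0)      # red end of the sweep
far = depth_to_rgb(2047)    # blue end of the sweep
```

Doing this per pixel in pure Python would be slow on a 640x480 frame, so in practice the same mapping would be precomputed as a lookup table or done with array operations.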


Tuesday, November 16, 2010

Preparing to hack the Kinect - OpenKinect on MinGW32

My Kinect is on pre-order, so I can only get my hands on it tomorrow. That hasn't stopped me from hacking up the OpenKinect code on my VirtualBox Ubuntu and MinGW. There are multiple APIs for it by now in C, Python, Java and C#. I chose to use the C version for easy portability and a common Windows/Linux version, as well as the luxury of using CMake.

Here are the steps to get the glview kinect code compiled with MinGW32:


- Grab the OpenKinect code from Git, using MsysGit or similar.

- Grab the Windows-compatible libusb. I got the precompiled version to save time.

- You may or may not have GLUT. If you don't, grab the libraries here and use reimp to make them MinGW32 compatible.

- Add a win32 specific linker line into CMake which reads: target_link_libraries(glview freenect OpenGL32 glut32)

- Configure using CMake, point it to where libusb is, compile, and you are ready to possibly enjoy OpenKinect on Windows. I make no guarantees on whether it will actually work yet. If you have Windows and MinGW, try it and let me know.

AR2C Opening Ceremony - TechTalkFest

Yesterday we had the day-long opening ceremony of the Adelaide Radar Research Centre, a self-funded collaborative research centre directed by my PhD supervisor Doug Gray. The talks ranged from highly theoretical to commercial to what was reduced to a company ad (even though the speaker promised not to make it so).

I found the first talk most interesting, probably because I was most attentive at the beginning of the day and it presented an indisputable commercial success of radar research. GroundProbe discussed their mine wall stability radar and how it came into being, from concept to highly user-friendly truck-mountable versions.

The next talk was from Simon Haykin, dealing with the superbly abstracted Cognitive Radar. He kept drawing parallels with the visual brain and talking about layers of memory and feedback. At the end of the day, one of my colleagues asked: if the Cognitive Radar is paralleled by the visual brain, then where is the transmitter? Auditory brains of bats might have been a better analogy.

In the last talk before lunch, Marco Martorella talked about ISAR and how ISAR techniques can be used to sharpen up blurred SAR images of targets under motion, such as moored ships rocking. However, the ISAR results seem to flatten the image to a given projection plane, and issues concerning 3D structure estimation came up. There seems to be no acceptable solution to this yet. Maybe some of the work done in computer vision can help in this regard, particularly POSIT and friends.

The energy level dropped a bit after the lunch break. Several people from DSTO took off to get back to work, some came in. The mixture in the audience changed slightly. The afternoon talks covered Raytheon, BoM and the SuperDARN system.

The guy from Raytheon talked about their huge scope in radar systems, from the tiny targeting radars in aircraft to gigawatt beasts which require their own nuclear power plant, as well as their obsession with gallium nitride and heat dissipation.

The BoM fellow talked about the weather radar network which we have all come to know and love, from its origins in World War II to the networked and highly popular public system it is today. They seem to have a projective next-hour forecast of precipitation along the lines I had imagined before, but only for distribution to air-traffic control and such.

The last presentation, about SuperDARN, had the most animated sparkle I have seen in a radar presentation. It featured coronal discharges from the sun, interactions with the Earth's magnetic field (without which we would be pretty chargrilled) and the resulting fluctuations in the polar aurora.

Overall it was a pretty interesting and diverse day. At the end of the day we were left discussing the vagaries of finishing a PhD and the similarities to Zeno's Paradox.


Wednesday, November 10, 2010

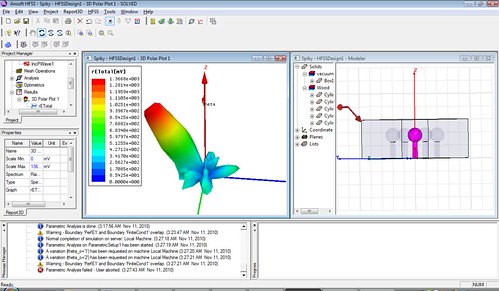

More Lollypop forests - in HFSS

Heath from our lab gave me a primer in Ansoft HFSS and since then I have been muddling along to try and represent a forest for SAR imaging. I managed to get some of my ngPlant-created models into it via STL from Blender, but beyond that HFSS was totally non-cooperative. The model refused to be healed to close any of the perceived holes. So my only option was to make some Lollypop trees like before using the supplied primitives, in 3D this time. Fortunately HFSS allows quick duplication, scaling etc. of a single primitive and links them with transform nodes. In the future this will allow more stochastic forest generation rather than a cookie-cutter one.

FEM kicks in beyond this point and calculates the scattered far fields with plane wave excitation. As expected, due to the perfectly conducting ground plane most of the energy radiates away, but there is still some significant return towards the illuminating direction. The nice change from FEKO is that the fields are calculated in all directions, not just the illuminating direction. So this setup can be used for bistatic imaging scenarios as well.

Sunday, November 7, 2010

Single Axis Stability in QuadCopter

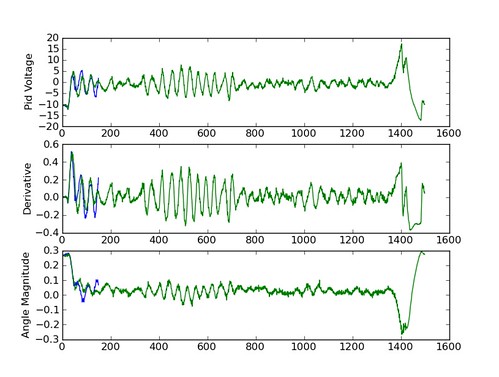

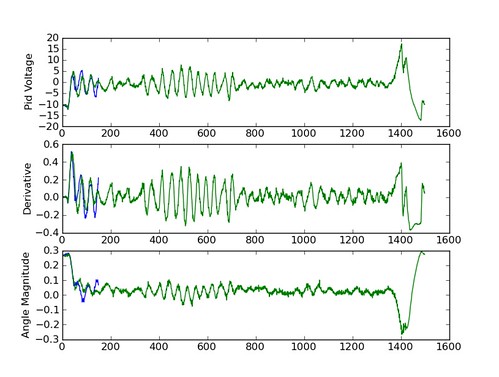

The Masters student working on the QuadCopter stability system had their final seminar last week. They have been hampered over the past year by an overly complex system design that I put together for them, language skills and lack of time due to coursework commitments. In spite of all this they managed to get a pretty good understanding of the electromechanical system, and produced system models and controllers in Simulink. The basic code is now up in 3 modules on Google Code - adelaide quadcopter project.

The final controller they ended up using was a basic Proportional-Derivative (PD) controller. The integral part leads to a tighter approach to the set point, but is not always necessary. There being 4 degrees of control, the quadcopter can be stabilized in yaw, pitch, roll and height. Basically a fully stable system should be able to hover. Linear motion in X and Y can be achieved by dropping it into an unstable state. This can be implemented as a state-space control, a multidimensional extension of the PD.
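A PD loop on a single axis can be sketched in a few lines. This is not the students' Simulink model: the unit-inertia plant, the gains `kp` and `kd`, the time step and the function names are all assumptions picked only to show the structure of the controller.

```python
def pd_step(error, previous_error, dt, kp, kd):
    """Return the PD control output for one time step."""
    derivative = (error - previous_error) / dt
    return kp * error + kd * derivative


def simulate_single_axis(initial_angle, kp=4.0, kd=2.0, dt=0.01, steps=2000):
    """Simulate a hypothetical unit-inertia axis driven only by the PD torque.

    Returns the angle (in the same units as initial_angle) after steps * dt seconds.
    """
    angle, rate = initial_angle, 0.0
    previous_error = 0.0 - angle
    for _ in range(steps):
        error = 0.0 - angle                 # set point is level, i.e. zero angle
        torque = pd_step(error, previous_error, dt, kp, kd)
        previous_error = error
        rate += torque * dt                 # unit inertia: acceleration equals torque
        angle += rate * dt
    return angle


# Start tilted by 18 degrees and let the controller level the axis for 20 seconds.
final_angle = simulate_single_axis(18.0)
```

In this toy model the derivative gain acts as damping, so lowering `kd` makes the tilt ring for longer before it settles.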


The single axis stability is not too bad as it comes out here, though it could be better. There is an unstable region with +/- 18 degrees of oscillation. At times, given low damping, the oscillations tend to build up.

